Integrating Serverless API with Applications

The AI Model Hub offers a wide range of model APIs that you can integrate into your applications through simple API calls. This guide lists popular applications that support Serverless API integration and gives general configuration instructions.

Supported Applications

These applications run on the platforms you use daily: desktop clients for Windows and Mac, web apps, browser extensions, and mobile apps. The table below lists each application by category with a brief description:

| Category | Application Name | Description |
|---|---|---|
| Desktop Clients | Chatbox | A desktop client supporting multiple popular LLMs, available for Windows, Mac, and Linux. |
| Desktop Clients | OpenCat | An AI chat client for iOS and macOS devices. |
| Desktop Clients | Dify | An open-source LLM application development platform that combines backend-as-a-service and LLMOps principles, enabling developers to rapidly build production-grade generative AI applications. |
| Desktop Clients | Nextchat | A self-hosted chat service that can be deployed on your own server with minimal setup. |
| Desktop Clients | Pal Chat | An AI assistant designed for iPhone and iPad. |
| Desktop Clients | Enconvo | An AI launcher and intelligent assistant that serves as a unified entry point for all AI functionalities on macOS. |
| Desktop Clients | Cherry Studio | A desktop AI assistant built for creators. |
| Browser Plugins | Immersive Translate | A clean and efficient browser extension for side-by-side bilingual web page translation. |
| Browser Plugins | ChatGPT Box | Integrates LLMs as a personal assistant directly into your browser. |
| Browser Plugins | HuaCi Translate | A browser translation plugin that combines multiple translation APIs and LLM services. |
| Browser Plugins | Oulu Translate | A feature-rich translation tool offering mouse-highlight search, paragraph-level side-by-side translation, and PDF document translation, supporting engines like DeepSeek AI, Bing, GPT, and Google. |
| IM Assistants | HuixiangDou | A domain-specific knowledge assistant integrated into WeChat or Feishu groups, focused on answering questions without casual chatter. |
| IM Assistants | QChatGPT | A highly stable, plugin-enabled, real-time web-connected LLM bot for QQ, QQ Channel, and OneBot platforms. |
| Development | Continue | An open-source IDE extension that uses LLMs as your programming copilot. |
| Development | Cursor | A VS Code-based AI-powered code editor designed for developers, emphasizing "AI pair programming" where the AI acts as your coding partner. |

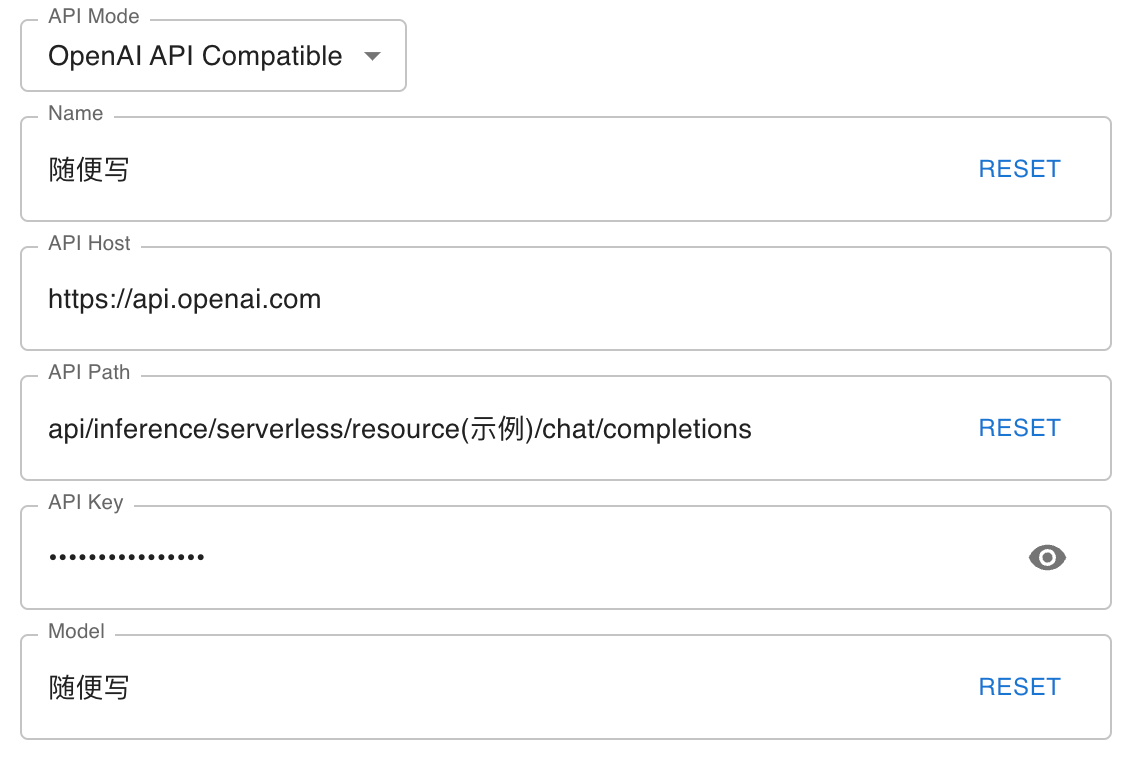

All listed applications support integration with Serverless API. Because Serverless API is compatible with the OpenAI API format, select "OpenAI API Compatible" as the model provider when configuring an application. You'll typically need to provide the API endpoint and an API key. Follow the steps below:

Integration Steps

Obtain the API Endpoint

On the Serverless API page, select your desired model and click "Call" to retrieve the API endpoint:

https://moark.ai/v1/chat/completions

Configure the API Endpoint

Using the URL above, split it into the following components:

- API Host (Domain): https://moark.ai

- API Path: /v1/chat/completions

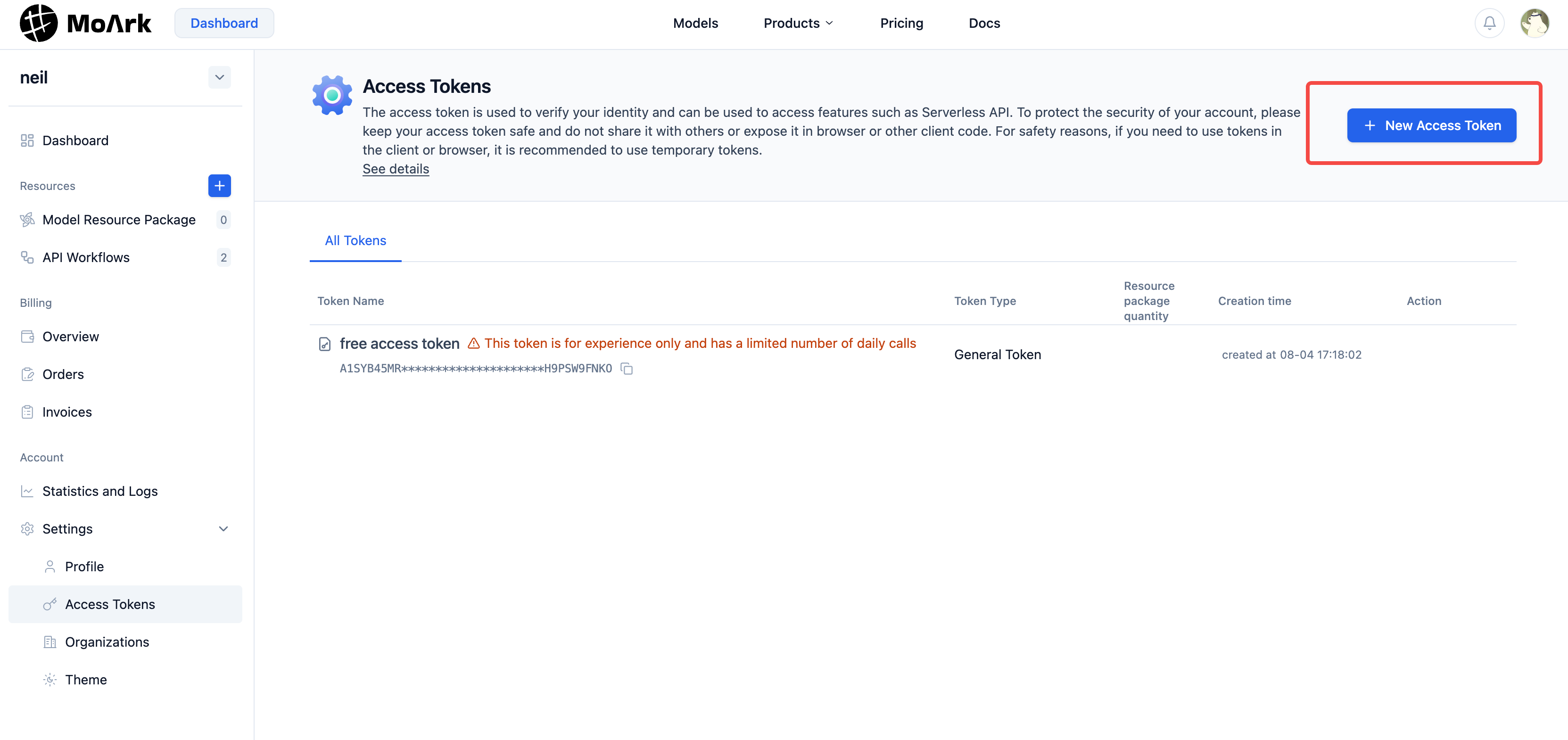
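Some applications ask for the host and the path in separate fields. As a minimal sketch, the split described above can be done with Python's standard `urllib.parse` module; the helper name `split_endpoint` is hypothetical and used only for illustration.

```python
from urllib.parse import urlsplit


def split_endpoint(url: str) -> tuple[str, str]:
    """Split a full endpoint URL into (API host, API path)."""
    parts = urlsplit(url)
    # The host keeps the scheme, matching the "API Host (Domain)" field.
    host = f"{parts.scheme}://{parts.netloc}"
    return host, parts.path


host, path = split_endpoint("https://moark.ai/v1/chat/completions")
print(host)  # https://moark.ai
print(path)  # /v1/chat/completions
```

If an application has only one field, such as a "Base URL", check its documentation to see whether it expects the host alone or the host plus part of the path.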

Generate an Access Token

Go to Dashboard -> Settings -> Access Tokens to create your access token.
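With the endpoint and an access token in hand, a direct call might look like the sketch below. It relies on assumptions not confirmed by this guide: that the token is sent as a `Bearer` value in the `Authorization` header, and that the request and response bodies follow the OpenAI chat completions format, as the compatibility note suggests. The function names and the model name in the usage note are placeholders.

```python
import json
import urllib.request

API_HOST = "https://moark.ai"
API_PATH = "/v1/chat/completions"


def build_chat_request(token: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request (not sent yet)."""
    payload = {
        "model": model,  # use the model selected on the Serverless API page
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_HOST + API_PATH,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # Assumption: Bearer authentication, as in the OpenAI API format.
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request) -> str:
    """Send the request and return the first reply's text."""
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # Assumption: the response uses the OpenAI "choices" layout.
    return body["choices"][0]["message"]["content"]
```

For example, `send_chat_request(build_chat_request(token, "your-model-name", "Hello"))` would send one user message and return the reply text, where `token` is the access token created above.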

In scenarios where the client directly calls the Serverless API, you may need to pass the Access Token to the frontend. For security, we recommend storing the token on your backend and routing API calls through your server.
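One way to follow this recommendation is a small relay on your own server that attaches the token before forwarding the request. The sketch below uses only Python's standard library and is illustrative, not production-ready: the environment variable `MOARK_ACCESS_TOKEN`, the port, and the helper names are hypothetical, and `Bearer` authentication is assumed from the OpenAI-compatible format.

```python
import os
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

UPSTREAM_URL = "https://moark.ai/v1/chat/completions"


def build_upstream_request(body: bytes, token: str) -> urllib.request.Request:
    """Wrap the client's JSON body in a request that carries the server-side token."""
    return urllib.request.Request(
        UPSTREAM_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


class ProxyHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read the JSON body sent by the frontend; it contains no token.
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        # Hypothetical variable name; the token never leaves the server.
        token = os.environ["MOARK_ACCESS_TOKEN"]
        try:
            with urllib.request.urlopen(
                build_upstream_request(body, token), timeout=60
            ) as resp:
                status, payload = resp.status, resp.read()
        except urllib.error.HTTPError as err:
            # Pass upstream error responses back to the client unchanged.
            status, payload = err.code, err.read()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8080) -> None:
    """Start the relay on localhost; blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), ProxyHandler).serve_forever()
```

Calling `serve()` starts the relay, and the frontend then posts its chat payload to your server instead of to the Serverless API. A real deployment would also need its own user authentication and rate limiting, which this sketch omits.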

If you must use the token directly on the client side, MoArk provides an API to generate temporary tokens. For details, refer to Create Temporary Access Token.